Model Check - VibeVoice: Next-Token Diffusion Meets Long-Form Speech Generation

Going over the code and the technical report of the new TTS model from Microsoft Research.

TL;DR for the Busy Reader:

VibeVoice generates up to 90 minutes of multi-speaker conversational audio using next-token diffusion

Introduces ultra-efficient speech tokenizers operating at 7.5 Hz (3200× compression vs. 24kHz audio)

Combines Qwen2.5 language models with token-level diffusion heads for streaming synthesis

Outperforms commercial systems like Gemini 2.5 Pro TTS and ElevenLabs V3 in subjective evaluations

Achieves real-time generation with ~160ms time-to-first-audio latency

Available as open-source 1.5B parameter model (7B model results reported but not yet released)

VibeVoice demonstrates a new approach to text-to-speech synthesis: it treats speech as a sequence of compact, meaning-bearing tokens rather than as raw audio signals. By combining highly compressed speech tokenizers, a language-model backbone, and a lightweight diffusion head, it makes long-form audio generation practical where it was previously hard to achieve.

The Core Innovation: Next-Token Diffusion for Speech

VibeVoice applies next-token diffusion, a framework introduced in LatentLM that treats input data as a sequence of latent vectors, each generated autoregressively through a per-token diffusion process. While LatentLM demonstrated this approach on multimodal tasks, VibeVoice adapts it specifically to long-form speech synthesis, combining autoregressive language modeling with diffusion-based audio generation.

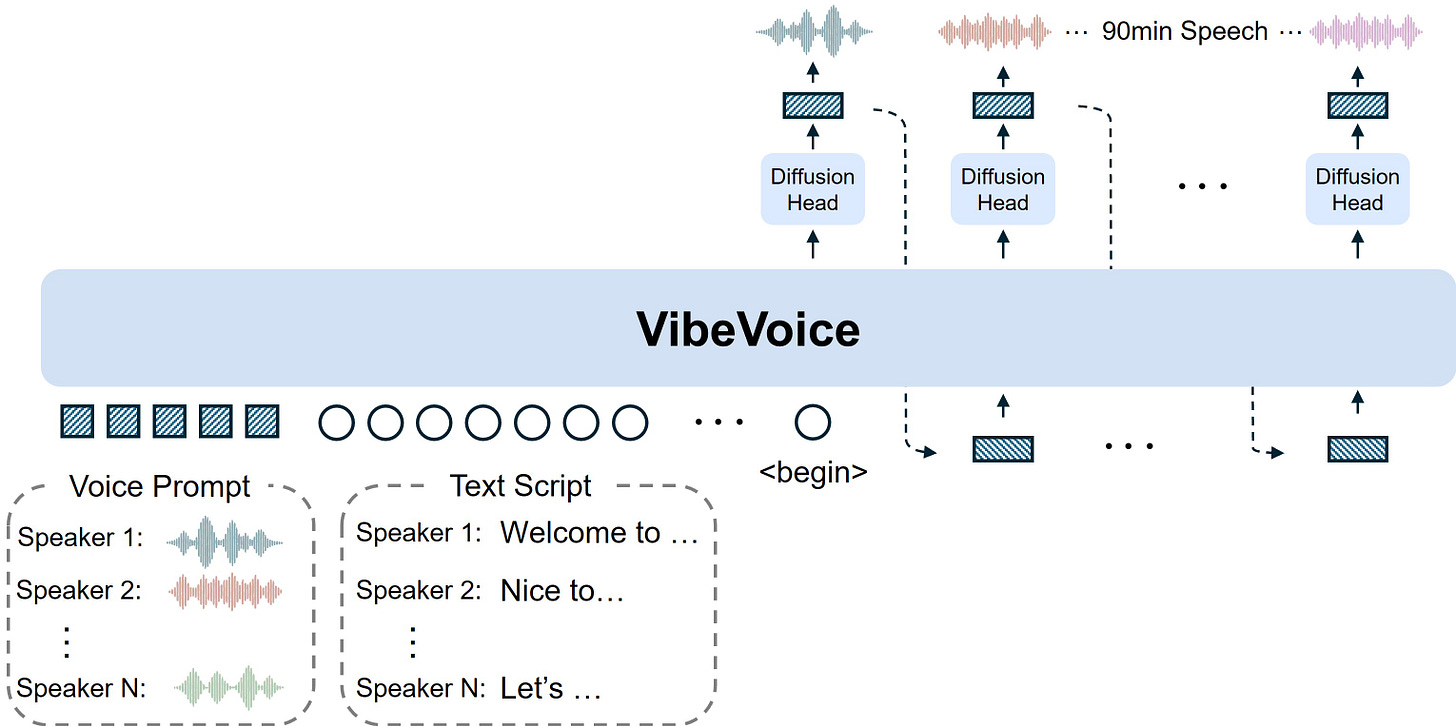

Understanding the Architecture

The system is built from three key components working in concert:

Text + Voice Samples → Language Model → Diffusion Head → Audio Tokenizers → Speech

These components address the unique challenges of long-form generation through several key innovations:

Ultra-Efficient Speech Tokenization: VibeVoice introduces two tokenizers, one acoustic and one semantic, that achieve a 3200× compression rate. Operating at just 7.5 Hz, they keep the speech-to-text token ratio at approximately 2:1, meaning two speech tokens cover roughly one BPE text token. This compression is what makes it possible to fit 90-minute conversations into a manageable context window.

Token-Level Diffusion Generation: Each continuous speech token is generated by a 4-layer diffusion head conditioned on the language model's hidden states.

Joint End-to-End Training: The language model and diffusion head are optimized together, allowing the diffusion component to learn conditioning strategies tuned specifically to the language model's representations.
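As a sanity check on the tokenization numbers above, the arithmetic can be reproduced in a few lines of Python. This is a back-of-the-envelope sketch: the 2:1 speech-to-text ratio is the approximate figure quoted above, voice prompts and special tokens are ignored, and the 65,536-token reference is the longest training length mentioned later in this post.

```python
SAMPLE_RATE_HZ = 24_000  # input audio sample rate
FRAME_RATE_HZ = 7.5      # tokenizer output rate (latent frames per second)


def compression_factor(sample_rate: float, frame_rate: float) -> float:
    """Number of audio samples summarized by each latent frame."""
    return sample_rate / frame_rate


def speech_tokens(minutes: float, frame_rate: float = FRAME_RATE_HZ) -> int:
    """Speech latents needed for `minutes` of audio at `frame_rate`."""
    return round(minutes * 60 * frame_rate)


n_speech = speech_tokens(90)
n_text = n_speech // 2  # ~2 speech tokens per BPE text token (approximate ratio)

print(compression_factor(SAMPLE_RATE_HZ, FRAME_RATE_HZ))  # 3200.0
print(n_speech)           # 40500
print(n_speech + n_text)  # 60750, under a 65,536-token context
```

For contrast, the same 90 minutes at a hypothetical 50 Hz frame rate (`speech_tokens(90, 50)`) would need 270,000 speech tokens, far beyond that context.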

Technical Deep Dive: The Tokenizer Innovation

The speech tokenizers enable VibeVoice's efficient long-form generation through aggressive compression while maintaining quality.

Acoustic Tokenizer: σ-VAE Architecture

The acoustic tokenizer builds on Variational Autoencoder principles but incorporates the σ-VAE variant to prevent variance collapse in autoregressive settings:

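Conceptually, each latent is sampled as

$$z = \mu + \sigma \odot \epsilon, \qquad \epsilon \sim \mathcal{N}(0, 1), \quad \sigma \sim \mathcal{N}(0, C_\sigma)$$

where the encoder predicts only the mean μ, while the scale σ is drawn from a fixed distribution with hyperparameter C_σ instead of being learned.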
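The sampling rule can be sketched in NumPy as follows. This is an illustration under stated assumptions, not VibeVoice's code: C_σ is treated as the standard deviation of σ, one σ is drawn per sample and broadcast over all frames and latent dimensions, and the 64-dimensional latent size and `c_sigma=0.5` in the example are hypothetical.

```python
import numpy as np


def sigma_vae_sample(mu: np.ndarray, c_sigma: float, rng: np.random.Generator) -> np.ndarray:
    """Sample z = mu + sigma * eps, sigma-VAE style.

    mu: (batch, frames, latent_dim) means from the encoder. sigma is NOT predicted
    by the encoder; it is drawn from N(0, c_sigma) (c_sigma used as a std here),
    so training cannot shrink it toward zero.
    """
    eps = rng.standard_normal(mu.shape)
    # Assumption: one sigma per sample, broadcast over frames and latent dims.
    # A negative sigma is harmless because eps is symmetric around zero.
    sigma_shape = (mu.shape[0],) + (1,) * (mu.ndim - 1)
    sigma = rng.normal(0.0, c_sigma, size=sigma_shape)
    return mu + sigma * eps


rng = np.random.default_rng(0)
mu = np.zeros((4, 75, 64))  # 4 clips x 75 frames (10 s at 7.5 Hz) x 64 dims (hypothetical)
z = sigma_vae_sample(mu, c_sigma=0.5, rng=rng)
print(z.shape)  # (4, 75, 64)
```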

The architecture employs:

7-stage hierarchical encoder/decoder with modified Transformer blocks

1D depth-wise causal convolutions instead of self-attention for streaming efficiency

Six downsampling layers achieving cumulative 3200× compression

~340M parameters per encoder/decoder component

This design lets the tokenizer compress 24kHz audio to 7.5 tokens per second while, according to the report's reconstruction results, delivering perceptual quality that beats codecs running at much higher token rates.
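Two of the design points above can be made concrete with a small NumPy sketch: a depthwise causal 1D convolution (one kernel per channel, input padded only on the left, so no output depends on future samples, which is what allows chunk-by-chunk streaming), and a check that six downsampling strides can multiply out to the stated 3200×. The stride values `[8, 5, 5, 4, 2, 2]` are a hypothetical factorization chosen for illustration, and the loop-based convolution is written for clarity rather than speed.

```python
import math

import numpy as np

# Hypothetical per-layer strides: one way six downsampling layers can reach 3200x.
STRIDES = [8, 5, 5, 4, 2, 2]


def causal_depthwise_conv1d(x: np.ndarray, kernels: np.ndarray) -> np.ndarray:
    """Depthwise causal conv (cross-correlation, as in PyTorch's Conv1d).

    x: (channels, time); kernels: (channels, k). Left-padding with k - 1 zeros
    means out[:, t] depends only on x[:, :t + 1], never on future samples.
    """
    channels, k = kernels.shape
    assert x.shape[0] == channels, "depthwise: one kernel per channel"
    padded = np.pad(x, ((0, 0), (k - 1, 0)))  # zeros on the left only
    out = np.zeros(x.shape, dtype=float)
    for t in range(x.shape[1]):
        # Window covers input steps t-k+1 .. t; each channel uses its own kernel.
        out[:, t] = np.sum(padded[:, t:t + k] * kernels, axis=1)
    return out


total_stride = math.prod(STRIDES)
print(total_stride)                 # 3200
print(10 * 24_000 // total_stride)  # 75 latent frames for 10 s of 24 kHz audio
```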

Semantic Tokenizer: ASR-Guided Content Preservation

The semantic tokenizer mirrors the acoustic architecture but targets content preservation rather than audio fidelity. It is trained with Automatic Speech Recognition (ASR) as a proxy task, which pushes its compressed features to retain the linguistic content of the speech.

During training, its output is decoded by Transformer layers to predict text transcripts, aligning the semantic representations with textual meaning. This decoder is discarded after training.

My Performance Analysis: Where Time Goes in Inference

Component-level timing analysis reveals VibeVoice's computational characteristics:

Language Model: 1064.2ms (45.9%) - Text understanding & speech timing

Diffusion Head: 376.0ms (16.2%) - High-quality latent generation

Acoustic Tokenizer Decode: 345.5ms (14.9%) - Latent → Audio conversion

Semantic Tokenizer Encode: 335.5ms (14.5%) - Audio → Semantic feedback

Acoustic Tokenizer Encode: 25.3ms (1.1%) - Voice conditioning

LM Head: 8.4ms (0.4%) - Token prediction

Preprocessing: 6.8ms (0.3%) - Text processing

Unaccounted/Overhead: 158.5ms (6.8%) - Memory, scheduling, etc.

TOTAL: 2320.1ms (100.0%)

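The language model dominates computational cost (45.9%), followed by the diffusion head (16.2%) and the acoustic decode and semantic encode passes (about 29% combined).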
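A breakdown like this can be collected with a small timing helper. The sketch below is a hypothetical `StageTimer`, not the script used for the numbers above: it wraps each component in a context manager and reports each stage's share of the total. On a GPU, make sure the work has finished before a stage ends (for example with `torch.cuda.synchronize()`), since kernel launches are asynchronous and their time would otherwise be attributed to the wrong stage.

```python
import time
from collections import defaultdict
from contextlib import contextmanager
from typing import Dict, Optional


class StageTimer:
    """Accumulates wall-clock milliseconds per named stage (hypothetical helper)."""

    def __init__(self) -> None:
        self.totals_ms: Dict[str, float] = defaultdict(float)

    @contextmanager
    def stage(self, name: str):
        start = time.perf_counter()
        try:
            yield
        finally:
            self.totals_ms[name] += (time.perf_counter() - start) * 1000.0

    def breakdown(self, total_ms: Optional[float] = None) -> Dict[str, float]:
        """Percentage of total_ms (default: sum of recorded stages) spent in each stage."""
        total = sum(self.totals_ms.values()) if total_ms is None else total_ms
        return {name: 100.0 * ms / total for name, ms in self.totals_ms.items()}


# Typical use: wrap each component call.
timer = StageTimer()
with timer.stage("preprocessing"):
    words = "Speaker 1: Hello there.".split()  # stand-in for real text processing

# Reproducing the shares from the measurements above:
report = StageTimer()
report.totals_ms.update({
    "language_model": 1064.2, "diffusion_head": 376.0,
    "acoustic_decode": 345.5, "semantic_encode": 335.5,
    "acoustic_encode": 25.3, "lm_head": 8.4, "preprocessing": 6.8,
    "overhead": 158.5,
})
shares = report.breakdown(total_ms=2320.1)
print(round(shares["language_model"], 1))  # 45.9
```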

Time-to-first-audio: ~160ms (streaming)

Streaming Generation: The Feedback Loop

VibeVoice generates coherent long-form audio through a sophisticated feedback mechanism that connects audio generation back to the language model:

Language model processes text + voice context → generates hidden states

Diffusion head conditions on hidden states → produces speech latents

Acoustic decoder converts latents → generates audio segment

Semantic encoder processes generated audio → extracts content features

Speech connectors project acoustic latents and semantic features → LLM hidden dimension

Combined acoustic + semantic embeddings → feed back to language model
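The loop above can be sketched end to end with toy stand-ins. Everything here is hypothetical: fixed random linear maps replace the real language model, diffusion head, decoder, encoder, and connectors; the "language model" just averages its inputs; the diffusion head is a single deterministic projection instead of iterative denoising; and the acoustic and semantic embeddings are summed, which is one plausible reading of "combined". What the sketch does show is the data flow: every generated frame is decoded to audio, re-encoded, projected, and appended to the language model's input for the next step.

```python
import numpy as np

rng = np.random.default_rng(0)
HIDDEN, ACOUSTIC_DIM, SEMANTIC_DIM = 32, 8, 16  # toy sizes (hypothetical)
SAMPLES_PER_FRAME = 3200                        # 24 kHz / 7.5 Hz

# Hypothetical stand-ins: fixed random linear maps in place of the real networks.
W_lm = rng.normal(0.0, 0.1, (HIDDEN, HIDDEN))
W_diff = rng.normal(0.0, 0.1, (HIDDEN, ACOUSTIC_DIM))
W_dec = rng.normal(0.0, 0.1, (ACOUSTIC_DIM, SAMPLES_PER_FRAME))
W_sem = rng.normal(0.0, 0.1, (SAMPLES_PER_FRAME, SEMANTIC_DIM))
W_ac_conn = rng.normal(0.0, 0.1, (ACOUSTIC_DIM, HIDDEN))
W_sem_conn = rng.normal(0.0, 0.1, (SEMANTIC_DIM, HIDDEN))


def language_model(inputs: np.ndarray) -> np.ndarray:
    """Toy 'LM': summarize all inputs so far into one hidden state."""
    return np.tanh(inputs.mean(axis=0) @ W_lm)


def generate(context: np.ndarray, n_frames: int) -> np.ndarray:
    inputs = list(context)  # text + voice-prompt embeddings, each of size HIDDEN
    audio = []
    for _ in range(n_frames):
        h = language_model(np.stack(inputs))  # 1. LM hidden state
        latent = h @ W_diff                   # 2. "diffusion head" -> speech latent
        chunk = latent @ W_dec                # 3. acoustic decoder -> audio segment
        sem = chunk @ W_sem                   # 4. semantic encoder -> content features
        # 5-6. connectors project both to LM width; summed and fed back as next input
        inputs.append(latent @ W_ac_conn + sem @ W_sem_conn)
        audio.append(chunk)
    return np.concatenate(audio)


wav = generate(rng.normal(size=(5, HIDDEN)), n_frames=4)
print(wav.shape)  # (12800,)
```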

The paper does not include any ablation studies, but my understanding is that the semantic encoder is needed to maintain coherence in long-form generation and to compensate for some of the problems that come with low-frame-rate tokenization.

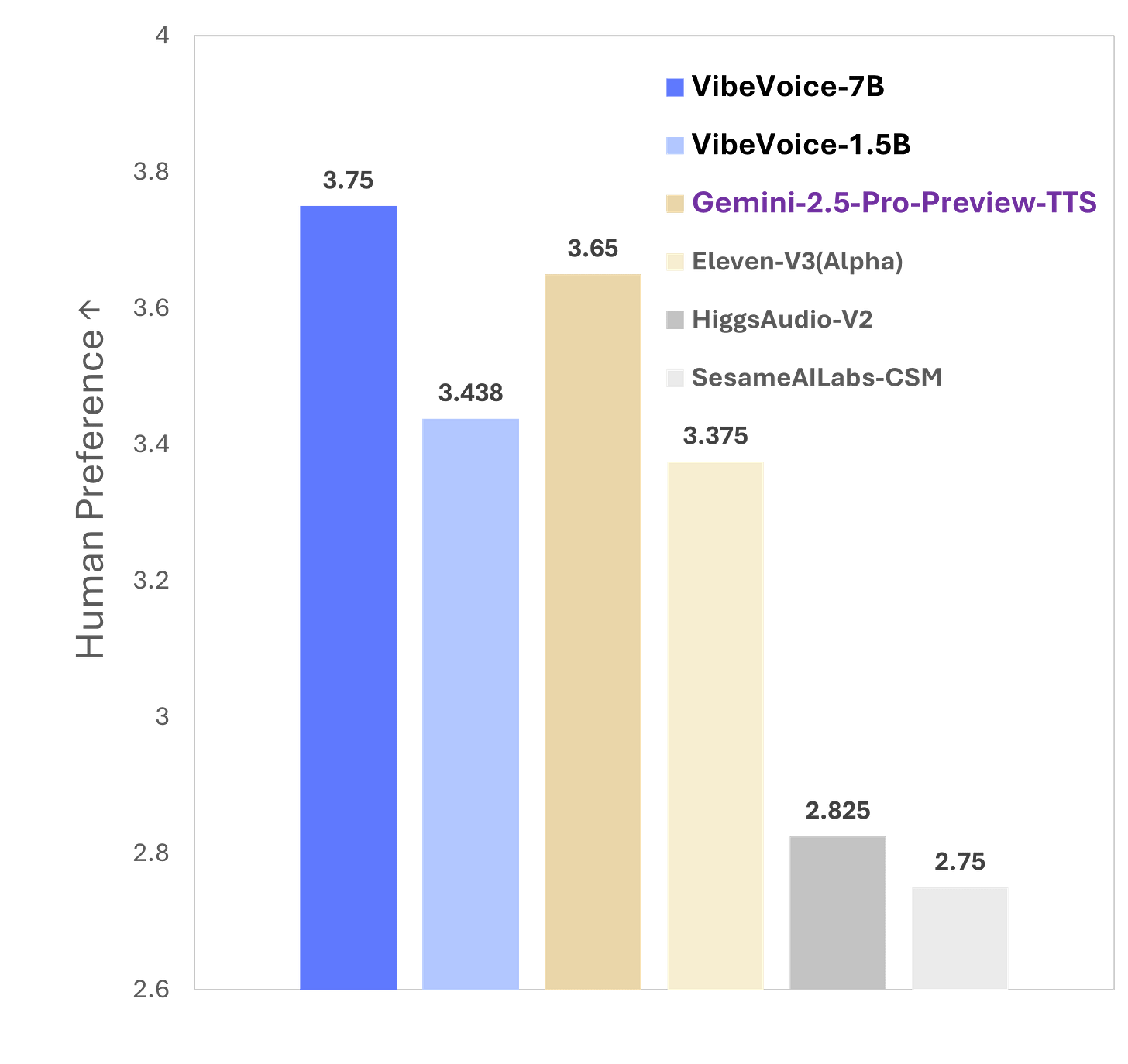

Benchmarking Against the Competition

VibeVoice's performance claims are backed by evaluations against commercial and open-source systems:

Subjective Evaluation Results

VibeVoice-7B: 3.76/5 overall rating (preference, realism, richness)

Gemini 2.5 Pro TTS: 3.66/5

ElevenLabs V3 Alpha: 3.40/5

SesameAILabs-CSM: 2.89/5

Objective Metrics

Word Error Rate: 1.29% (VibeVoice-7B) vs. 1.73% (Gemini)

Speaker Similarity: 0.692 (VibeVoice-7B); competitor scores vary

Generation Length: up to 5,000+ seconds (about 90 minutes) vs. typically under 100 seconds

The evaluation used 24 human annotators rating 8 long conversational transcripts, about 6 hours of audio per annotator. However, it does not include evaluations of shorter utterances.

The Scaling Story: From 1.5B to 7B Parameters

The comparison between VibeVoice variants reveals interesting scaling properties:

VibeVoice-1.5B: Strong baseline performance, efficient inference (open-source)

VibeVoice-7B: Significant gains in perceptual quality, enhanced cross-lingual capabilities (evaluation results reported, model not yet released)

The 7B model demonstrates particular strength in:

Richer timbre reproduction

More natural intonation patterns

Better voice cloning fidelity

Enhanced multilingual transfer (English/Chinese)

This scaling behavior suggests that larger language models contribute substantially to speech quality, not just text understanding.

Implementation Insights: What the Code Reveals

Examining VibeVoice's implementation reveals several pragmatic design choices:

Curriculum Learning Strategy

Training uses a curriculum over sequence length, progressively increasing it from 4,096 to 65,536 tokens. This makes training on long sequences stable while keeping compute manageable.
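A length curriculum can be as simple as a step-dependent cap on sequence length. The exact schedule is not described here, so the sketch below assumes one: a hypothetical `max_seq_len` that doubles the cap from 4,096 to 65,536 tokens in equal-length stages (five lengths in total). It only works when the ratio between the end and start lengths is a power of two.

```python
def max_seq_len(step: int, total_steps: int, start: int = 4_096, end: int = 65_536) -> int:
    """Hypothetical curriculum: double the length cap in equal-length stages.

    Assumes end / start is a power of two (65,536 / 4,096 = 16 -> 5 lengths).
    """
    n_stages = (end // start).bit_length()  # 16 -> 5 stages: 4K, 8K, 16K, 32K, 64K
    stage = min(step * n_stages // total_steps, n_stages - 1)
    return start * 2 ** stage


print([max_seq_len(s, 100) for s in (0, 20, 40, 60, 80, 99)])
# [4096, 8192, 16384, 32768, 65536, 65536]
```

Training sequences at each stage would then be cropped or packed to the current cap. For reference, a 90-minute conversation at 7.5 Hz is about 40,500 speech tokens, so only the final 65,536-token stage can hold one whole.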

The Diffusion Head: Architecture and Training

Now we examine the diffusion head in detail. Unlike traditional speech synthesis that directly predicts acoustic features, VibeVoice employs a denoising diffusion process to generate high-quality latent representations.

Architecture Components:

4-layer neural network with adaptive layer normalization (AdaLN)

Timestep embedder that encodes diffusion step information via sinusoidal embeddings

Condition projector that transforms language model hidden states into diffusion conditioning

Feed-forward networks with SwiGLU activation for feature refinement

Generation Process: The diffusion head performs 10 denoising steps, starting from Gaussian noise and conditioning on the language model's hidden states. At each step it predicts the "velocity" (v-parameterization) rather than the noise directly, with noise levels following a cosine schedule.
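The v-parameterization and a 10-step sampler can be sketched in NumPy. Assumptions to flag: the cosine schedule uses the common form ᾱ(t) = cos²(((t + s)/(1 + s))·π/2), normalized so ᾱ(0) = 1, with s = 0.008; the update rule is a deterministic DDIM-style step swapped in for illustration, and VibeVoice's actual solver may differ; `predict_v` stands in for the diffusion head with its LM conditioning already bound. The key identities are x̂₀ = √ᾱ·x_t − √(1−ᾱ)·v and ε̂ = √(1−ᾱ)·x_t + √ᾱ·v.

```python
import numpy as np


def alpha_bar(t: float, s: float = 0.008) -> float:
    """Cosine schedule: fraction of signal variance left at t in [0, 1]; alpha_bar(0) = 1."""
    def f(u: float) -> float:
        return np.cos((u + s) / (1.0 + s) * np.pi / 2) ** 2
    return float(f(t) / f(0.0))


def sample_v(predict_v, shape, steps: int = 10, rng=None) -> np.ndarray:
    """Deterministic DDIM-style sampling with a v-prediction model (assumed solver)."""
    rng = np.random.default_rng() if rng is None else rng
    x = rng.standard_normal(shape)         # start from Gaussian noise
    ts = np.linspace(1.0, 0.0, steps + 1)  # t = 1.0, 0.9, ..., 0.0
    for t, t_prev in zip(ts[:-1], ts[1:]):
        a, a_prev = alpha_bar(t), alpha_bar(t_prev)
        v = predict_v(x, t)                               # model predicts velocity
        x0_hat = np.sqrt(a) * x - np.sqrt(1.0 - a) * v    # clean-latent estimate
        eps_hat = np.sqrt(1.0 - a) * x + np.sqrt(a) * v   # noise estimate
        x = np.sqrt(a_prev) * x0_hat + np.sqrt(1.0 - a_prev) * eps_hat
    return x


# Check the algebra with an "oracle" that returns the exact v for a known target.
target = np.random.default_rng(1).standard_normal((1, 64))  # hypothetical clean latent


def oracle_v(x_t, t):
    a = alpha_bar(t)
    return (np.sqrt(a) * x_t - target) / np.sqrt(1.0 - a)


out = sample_v(oracle_v, target.shape, rng=np.random.default_rng(0))
print(np.allclose(out, target))  # True
```

Predicting v rather than ε keeps both estimates well behaved at the noisiest step, where ᾱ is close to zero and an ε-prediction alone says almost nothing about x₀.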

```python
# Core diffusion head forward pass from VibeVoice (method of the diffusion head module)
def forward(self, noisy_images, timesteps, condition):
    # Project noisy latents to working dimension
    # (despite the name, noisy_images holds the noisy speech latents)
    x = self.noisy_images_proj(noisy_images)

    # Embed timestep information (which diffusion step we're on)
    t = self.t_embedder(timesteps)

    # Transform LM hidden states to conditioning vectors
    condition = self.cond_proj(condition)
    c = condition + t  # Combine timestep + conditioning

    # Apply 4 layers of adaptive normalization + refinement
    for layer in self.layers:
        x = layer(x, c)  # Each layer modulated by conditioning

    # Final projection to output space
    x = self.final_layer(x, c)
    return x  # Predicted velocity for this denoising step
```
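What `layer(x, c)` does inside each of the four layers is not shown in the excerpt. A common pattern, used in DiT-style models, has the conditioning vector produce a shift, a scale, and a gate that modulate a normalized input, here followed by a SwiGLU feed-forward. The NumPy sketch below follows that pattern as an assumption; the class `AdaLNLayer` is hypothetical, and VibeVoice's actual layer may use a different normalization (for example RMSNorm) or a different split of modulation parameters.

```python
import numpy as np


def silu(x: np.ndarray) -> np.ndarray:
    return x / (1.0 + np.exp(-x))


def layer_norm(x: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Normalize over the last dim with no learned affine; c supplies scale and shift."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


class AdaLNLayer:
    """Hypothetical conditioned block: adaLN modulation, SwiGLU FFN, gated residual."""

    def __init__(self, dim: int, ffn_dim: int, rng: np.random.Generator, zero_init: bool = True):
        # c -> (shift, scale, gate). Zero init makes the block an identity map at start.
        self.w_mod = (np.zeros((dim, 3 * dim)) if zero_init
                      else rng.normal(0.0, 0.02, (dim, 3 * dim)))
        self.w_gate = rng.normal(0.0, 0.02, (dim, ffn_dim))
        self.w_up = rng.normal(0.0, 0.02, (dim, ffn_dim))
        self.w_down = rng.normal(0.0, 0.02, (ffn_dim, dim))

    def __call__(self, x: np.ndarray, c: np.ndarray) -> np.ndarray:
        shift, scale, gate = np.split(silu(c) @ self.w_mod, 3, axis=-1)
        h = layer_norm(x) * (1.0 + scale) + shift                    # adaptive LayerNorm
        h = (silu(h @ self.w_gate) * (h @ self.w_up)) @ self.w_down  # SwiGLU feed-forward
        return x + gate * h                                          # gated residual update


rng = np.random.default_rng(0)
x = rng.standard_normal((5, 16))  # 5 positions, 16-dim working width (toy sizes)
c = rng.standard_normal((5, 16))  # stands in for cond_proj(condition) + t_embedder(timesteps)
out = x
for layer in [AdaLNLayer(16, 32, rng, zero_init=False) for _ in range(4)]:
    out = layer(out, c)
print(out.shape)  # (5, 16)
```

Zero-initializing the modulation weights makes every block start as the identity (the gate is zero), a trick commonly used to stabilize training of conditioned blocks.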

Speech Connectors Implementation: The feedback loop relies on speech connectors, simple 2-layer MLPs with an RMSNorm in between, that transform tokenizer features into language model-compatible representations:

```python
import torch.nn as nn
from transformers.models.llama.modeling_llama import LlamaRMSNorm


# Actual SpeechConnector implementation from VibeVoice
class SpeechConnector(nn.Module):
    def __init__(self, input_dim, output_dim):
        super().__init__()
        self.fc1 = nn.Linear(input_dim, output_dim)
        self.norm = LlamaRMSNorm(output_dim, eps=1e-6)
        self.fc2 = nn.Linear(output_dim, output_dim)

    def forward(self, features, **kwargs):
        x = self.fc1(features)  # Project to LM hidden space
        x = self.norm(x)        # Stabilize with RMSNorm
        x = self.fc2(x)         # Refine representations
        return x
```

Two connectors of this form (one per feature type, each with its own weights) handle acoustic and semantic features, mapping both into a shared embedding space the language model can process. The diffusion process also uses classifier-free guidance with a modest scale of 1.3 to enhance quality.
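Classifier-free guidance itself is a one-line combination of two predictions per denoising step, one with the conditioning and one without. The sketch below shows only that combination at scale 1.3; `cfg_combine` is a hypothetical helper, the arrays are toy values, and how VibeVoice produces its unconditional branch is not covered here.

```python
import numpy as np


def cfg_combine(pred_cond: np.ndarray, pred_uncond: np.ndarray, scale: float = 1.3) -> np.ndarray:
    """Classifier-free guidance: extrapolate from the unconditional toward the conditional prediction.

    scale = 1.0 returns pred_cond; scale > 1.0 amplifies the effect of the condition.
    """
    return pred_uncond + scale * (pred_cond - pred_uncond)


v_cond = np.array([1.0, 2.0])    # toy conditional velocity
v_uncond = np.array([0.0, 1.0])  # toy unconditional velocity
print(cfg_combine(v_cond, v_uncond))  # approximately [1.3 2.3]
```

Because both branches are needed at every denoising step, guidance means two predictions per step (often batched into one call).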

Limitations: What VibeVoice Can't Do (Yet)

Scientific objectivity requires acknowledging current limitations:

Language Constraints

VibeVoice currently supports only English and Chinese. Other languages may produce unexpected outputs due to training data limitations.

Shorter or Single Speaker Synthesis

The model focuses on long-context speech synthesis. In my tests, it struggles with shorter utterances and pure voice cloning.

Speaker Overlap

The current implementation does not model overlapping speech, which limits its use in natural conversations where speakers interrupt each other.

Computational Requirements

In my runs, inference peaked at about 8 GB of VRAM when generating 90 seconds of audio.

Technical Takeaways for Practitioners

Several key insights emerge for researchers and practitioners:

Compression Matters: Ultra-low frame rates enable long-form generation without sacrificing quality

Continuous Tokens + Diffusion Heads: Continuous AR models with per-token diffusion heads can replace the recent discrete, multi-codebook (RVQ, FSQ) AR TTS models, even at lower frame rates.

Feedback Loops: Semantic feedback enables coherent long-form generation

Scale Effects: Larger language models significantly improve speech quality, not just text understanding

Thanks for reading… 👋

Want to explore VibeVoice yourself? Check out the demo, browse the code, or download the open-source VibeVoice-1.5B model from HuggingFace. The technical report provides additional implementation details for those diving deeper into the architecture.

I also found the 7B model under a different HF account: https://huggingface.co/WestZhang/VibeVoice-Large-pt