Paper check: Softpick, Canon Layers, Parallel Transformer

Going over three recent Transformer papers (Softpick, Canon Layers, and Parallel Transformer) and my takes after benchmarking them with BlaGPT

Recently, I've been testing different architectural modifications in my BlaGPT benchmark to see how they affect performance.

I wanted to share what I've learned about three interesting techniques: Softpick, Canon Layers, and Parallel Transformer blocks.

Softpick: A Different Approach to Attention

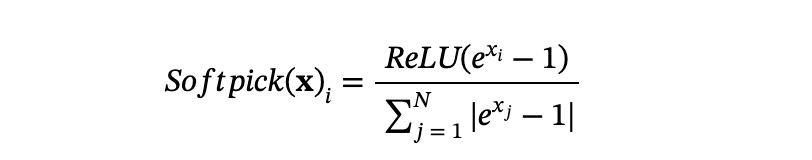

Softpick offers an interesting alternative to the standard softmax function in attention blocks. It differs from softmax in two ways:

- The numerator can be exactly zero, so a token can receive no attention weight at all
- Negative values still contribute to the denominator

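Why does this matter? This approach prevents attention sinks - a phenomenon where attention heads pile their weight onto a few specific tokens (usually early ones). Softmax forces the weights to sum to 1, so a head with nothing useful to attend to still has to put its weight somewhere. Softpick lifts that constraint.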

The first-order (Jacobian) properties remain similar to those of regular softmax, so it doesn't disrupt the model's learning dynamics too drastically.
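To make the two differences concrete, here is a minimal plain-Python sketch of Softpick next to softmax for a single row of attention scores. It assumes the rectified form ReLU(e^x - 1) / (sum |e^x - 1| + eps) as I understand it from the paper. The function names, the `eps` default, and the non-negative shift used for numerical stability are my own choices for illustration, not code from BlaGPT.

```python
import math

def softpick(scores, eps=1e-8):
    """Softpick over one row of attention scores (assumed form, see lead-in)."""
    # Shift by a non-negative max so neither exponential can overflow.
    # Numerator and denominator are both divided by e^m, so the ratio is unchanged.
    m = max(max(scores), 0.0)
    shifted = [math.exp(s - m) - math.exp(-m) for s in scores]
    # Negative entries still count in the denominator through their absolute value.
    denom = sum(abs(v) for v in shifted) + eps
    # ReLU in the numerator: scores <= 0 get exactly zero weight.
    return [max(v, 0.0) / denom for v in shifted]

def softmax(scores):
    """Standard softmax, for comparison."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

row = [2.0, 0.0, -1.0]
pick_weights = softpick(row)  # second and third weights are exactly 0.0
soft_weights = softmax(row)   # every weight is positive and they sum to 1
```

Note that the Softpick weights do not have to sum to 1, and a row of all-negative scores yields all zeros. That is what lets a head attend to nothing instead of dumping weight on a sink token.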

In my testing, Softpick resulted in a slightly worse validation loss compared to standard softmax. However, it completely eliminated attention sinks, which could be valuable for model quantization or longer context lengths.

Canon Layers: Mixing History with Current State

Canon Layers are essentially causal 1D convolutions that combine the current hidden state with previous states. The kernel size determines how many previous states get mixed in.

z_t = W1*h_t + W2*h_(t-1) + W3*h_(t-2) + ...

This isn't entirely new - RWKV has been using a very similar concept. However, the paper demonstrates how these layers can boost performance when added to transformer blocks in various configurations.
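The weighted sum over the current and previous hidden states can be sketched in plain Python as below. This is only an illustration under some assumptions: `canon_layer` is a hypothetical name, the weights are per-channel (depthwise) vectors rather than full matrices, and positions before the start of the sequence are treated as zero. A real implementation would more likely use a framework's 1D convolution with left padding.

```python
def canon_layer(hidden, weights):
    """Causal mix of each hidden state with its predecessors.

    hidden:  list of T hidden states, each a list of D floats
    weights: list of K per-channel weight vectors (D floats each);
             weights[k] is applied to h_(t-k), so K is the kernel size
    """
    dim = len(hidden[0])
    out = []
    for t in range(len(hidden)):
        z = [0.0] * dim
        for k, w in enumerate(weights):
            if t - k < 0:
                break  # causal: nothing before the first token (implicit zero padding)
            h_prev = hidden[t - k]
            for d in range(dim):
                z[d] += w[d] * h_prev[d]
        out.append(z)
    return out

# Kernel size 2: each output mixes h_t with half of h_(t-1).
hidden = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
weights = [[1.0, 1.0], [0.5, 0.5]]
mixed = canon_layer(hidden, weights)
```

Because each output only looks backwards, changing a later token never affects earlier outputs, which keeps the layer safe to use in an autoregressive model.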

One particularly interesting finding is that Canon Layers help models without positional encoding (NoPE) perform on par with models using RoPE (Rotary Positional Encoding).

My experiments confirmed that adding Canon Layers before Attention and MLP blocks noticeably improved model performance.

Parallel Transformer Blocks: Efficiency Gains

Traditional transformer blocks process data sequentially: first through attention, then through an MLP.

Parallel Transformer blocks take a different approach by running these operations simultaneously and combining their outputs:

z = x + MLP(x) + Attention(x)

This design was implemented in Google's PaLM models. The main advantage is efficiency - it reduces memory usage and speeds up processing without sacrificing performance.
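The difference in data flow between the two block types is easy to see in a toy sketch. Here `attention` and `mlp` are stand-in callables on a plain list of floats, not real layers, and normalization is left out to match the formula above. All names are hypothetical and chosen for illustration.

```python
def sequential_block(x, attention, mlp):
    """Standard block: the MLP sees the output of the attention residual."""
    h = [xi + ai for xi, ai in zip(x, attention(x))]
    return [hi + mi for hi, mi in zip(h, mlp(h))]

def parallel_block(x, attention, mlp):
    """Parallel block: both branches read the same input x and are summed."""
    return [xi + ai + mi for xi, ai, mi in zip(x, attention(x), mlp(x))]

# Toy stand-ins so the two blocks can be compared by hand.
toy_attention = lambda v: [2.0 * e for e in v]
toy_mlp = lambda v: [e + 1.0 for e in v]

x = [1.0, 2.0]
seq_out = sequential_block(x, toy_attention, toy_mlp)
par_out = parallel_block(x, toy_attention, toy_mlp)
```

In the parallel version neither branch waits on the other, so in a real model the two computations (and their input projections) can be fused or overlapped. The outputs differ from the sequential block, so this is a different architecture rather than a reordering of the same computation.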

Some also claim that Google's Gemini models use a similar architecture.

My tests showed that Parallel Transformer blocks delivered validation loss comparable to the baseline sequential approach while training approximately 15% faster. That's a significant speedup with no measurable loss penalty in my runs.

Conclusion

After running these experiments, here's what I found most valuable:

- Canon Layers provide a clear performance improvement when placed before Attention and MLP blocks.
- Softpick eliminates attention sinks, but at a slight cost to validation loss.
- Parallel Transformer blocks maintain performance while offering a substantial speedup of about 15%.

👀 All the code for these experiments is available in the BlaGPT repository.